Ceph RBD Integration

Ceph RBD (RADOS Block Device) is the block storage interface of the Ceph distributed storage system. It provides software-defined, thin-provisioned block devices for virtual machine disks, with the scalability, reliability, and high availability of the underlying Ceph cluster.

Prerequisites

This guide assumes you have an operational Ceph cluster with at least one pool that uses either erasure coding or replication for redundancy.

Initial Configuration

Create Ceph Authentication Credentials

Generate authentication credentials for the hypervisor client with appropriate permissions:

ceph auth get-or-create client.hypervisor mon 'profile rbd' osd 'profile rbd pool=<POOL_NAME>'

Expected output:

[client.hypervisor]

key = AQAJmABpozPUFxAAMSjKlPuGYA6C3svHvJyFNQ==
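
Later steps need only the key value, not the whole keyring entry. A minimal sketch of pulling it out of output like the sample above; the function name `extract_ceph_key` is illustrative and not part of any Ceph tooling:

```shell
# Hypothetical helper: print the value of the "key = ..." line from
# keyring-formatted text read on standard input.
extract_ceph_key() {
    awk '$1 == "key" && $2 == "=" { print $3; exit }'
}

# Example using the sample output shown above.
printf '[client.hypervisor]\n\tkey = AQAJmABpozPUFxAAMSjKlPuGYA6C3svHvJyFNQ==\n' | extract_ceph_key
```

On a node with admin access, `ceph auth get-key client.hypervisor` prints the same value directly.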

Install Required Dependencies

Install the following packages on all hypervisor nodes:

qemu-block-extra

ceph-common
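
After installation, a quick check that the command-line tools used in the following steps are on the PATH can save debugging time later. The sketch below assumes that `ceph` and `rbd` come from ceph-common and that `virsh` is already present as part of the hypervisor's libvirt setup; `missing_commands` is a made-up helper name:

```shell
# Hypothetical helper: print every named command that is not on PATH.
missing_commands() {
    for cmd in "$@"; do
        command -v "$cmd" >/dev/null 2>&1 || printf '%s\n' "$cmd"
    done
}

# Empty output means all three client tools were found.
missing_commands ceph rbd virsh
```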

Hypervisor Integration

Configure Libvirt Secret

On your first hypervisor, create a Libvirt secret for secure Ceph authentication:

cat > secret.xml <<EOF

<secret ephemeral='no' private='no'>

<usage type='ceph'>

<name>client.hypervisor secret</name>

</usage>

</secret>

EOF

virsh secret-define --file secret.xml

Expected output:

Secret 88ced2a0-8b9c-44bd-9efb-1979cac33f24 created

Note: Save the generated UUID for storage configuration in the Management Server.
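
If you script this step, the UUID can be captured from the command output rather than copied by hand. A minimal sketch, assuming the output has the "Secret <UUID> created" form shown above; `extract_secret_uuid` is an illustrative name:

```shell
# Hypothetical helper: print the UUID from a "Secret <UUID> created" line on stdin.
extract_secret_uuid() {
    awk '$1 == "Secret" && $3 == "created" { print $2; exit }'
}

# Example using the sample output shown above.
echo 'Secret 88ced2a0-8b9c-44bd-9efb-1979cac33f24 created' | extract_secret_uuid
```

In practice this could be used as `SECRET_UUID=$(virsh secret-define --file secret.xml | extract_secret_uuid)`. Running `virsh secret-list` afterwards shows the secrets known to libvirt.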

Set Secret Value

Configure the secret with your Ceph authentication key:

virsh secret-set-value --secret <UUID> <USER_KEY>

Parameters:

- UUID: 88ced2a0-8b9c-44bd-9efb-1979cac33f24 (from the previous step)

- USER_KEY: AQAJmABpozPUFxAAMSjKlPuGYA6C3svHvJyFNQ== (from the authentication credentials)

Expected output:

Secret value set
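
A truncated or mistyped key is easy to miss because the secret is stored either way. One possible guard is to confirm the key decodes as base64 before setting it. This sketch assumes GNU coreutils `base64`; `is_base64` is a hypothetical helper name:

```shell
# Hypothetical helper: succeed only if the argument is non-empty and decodes as base64.
# Assumes GNU coreutils base64, which exits non-zero on invalid input.
is_base64() {
    [ -n "$1" ] && printf '%s' "$1" | base64 -d >/dev/null 2>&1
}

if is_base64 'AQAJmABpozPUFxAAMSjKlPuGYA6C3svHvJyFNQ=='; then
    echo "key looks like valid base64"
fi
```

After the value is set, `virsh secret-get-value <UUID>` prints the stored value, which should match the Ceph key.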

Configure Ceph Client

Edit the Ceph client configuration file /etc/ceph/ceph.conf:

[global]

mon_host = <MON_HOST_1> <MON_HOST_2> <MON_HOST_3> ...

[client.hypervisor]

key = AQAJmABpozPUFxAAMSjKlPuGYA6C3svHvJyFNQ==

Verify Connectivity

Test the connection between your hypervisor and the Ceph cluster:

ceph version --id hypervisor

Expected output:

ceph version 16.2.15 (618f440892089921c3e944a991122ddc44e60516) pacific (stable)
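
The version check confirms that the monitors are reachable. To also confirm that the client can reach the pool it was granted, listing the pool's images is one option. A sketch under that assumption; `check_rbd_access` is an illustrative wrapper around `rbd ls`, and the client name defaults to the `hypervisor` ID used in this guide:

```shell
# Hypothetical helper: succeed if the client can list images in the given pool.
# Usage: check_rbd_access <pool> [client-id]
check_rbd_access() {
    pool=$1
    id=${2:-hypervisor}
    if rbd ls --id "$id" --pool "$pool" >/dev/null 2>&1; then
        echo "OK: client.$id can list images in pool $pool"
    else
        echo "FAILED: client.$id cannot list images in pool $pool" >&2
        return 1
    fi
}
```

Call it as `check_rbd_access <POOL_NAME>` on each hypervisor. An empty pool still counts as success, since only the listing itself has to work.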

Replicate Configuration Across Hypervisors

For additional hypervisors in your cluster:

- Copy the contents of /etc/libvirt/secrets from the first hypervisor to all other hypervisor nodes

- Restart the libvirt daemon to update the internal database:

systemctl restart libvirtd

Important: This command restarts the libvirt services. Use caution on hosts with running virtual machine instances, and plan a maintenance window accordingly.
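
With more than a couple of hypervisors, it can help to generate the copy and restart commands and review them before running anything. The sketch below only prints commands. It assumes root SSH access and `rsync` on every node, and the host names `hv2` and `hv3` are placeholders:

```shell
# Hypothetical helper: print (not run) the commands that would sync the
# libvirt secrets directory to each host and restart libvirtd there.
# Assumes root SSH access and rsync on every node.
plan_secret_sync() {
    for host in "$@"; do
        printf 'rsync -a /etc/libvirt/secrets/ root@%s:/etc/libvirt/secrets/\n' "$host"
        printf 'ssh root@%s systemctl restart libvirtd\n' "$host"
    done
}

# hv2 and hv3 are placeholder host names.
plan_secret_sync hv2 hv3
```

Once the printed commands look right, they can be run by hand during the maintenance window. `virsh secret-list` on each target node should then show the same UUID as on the first hypervisor.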

Management Server Configuration

Configure Storage Pool

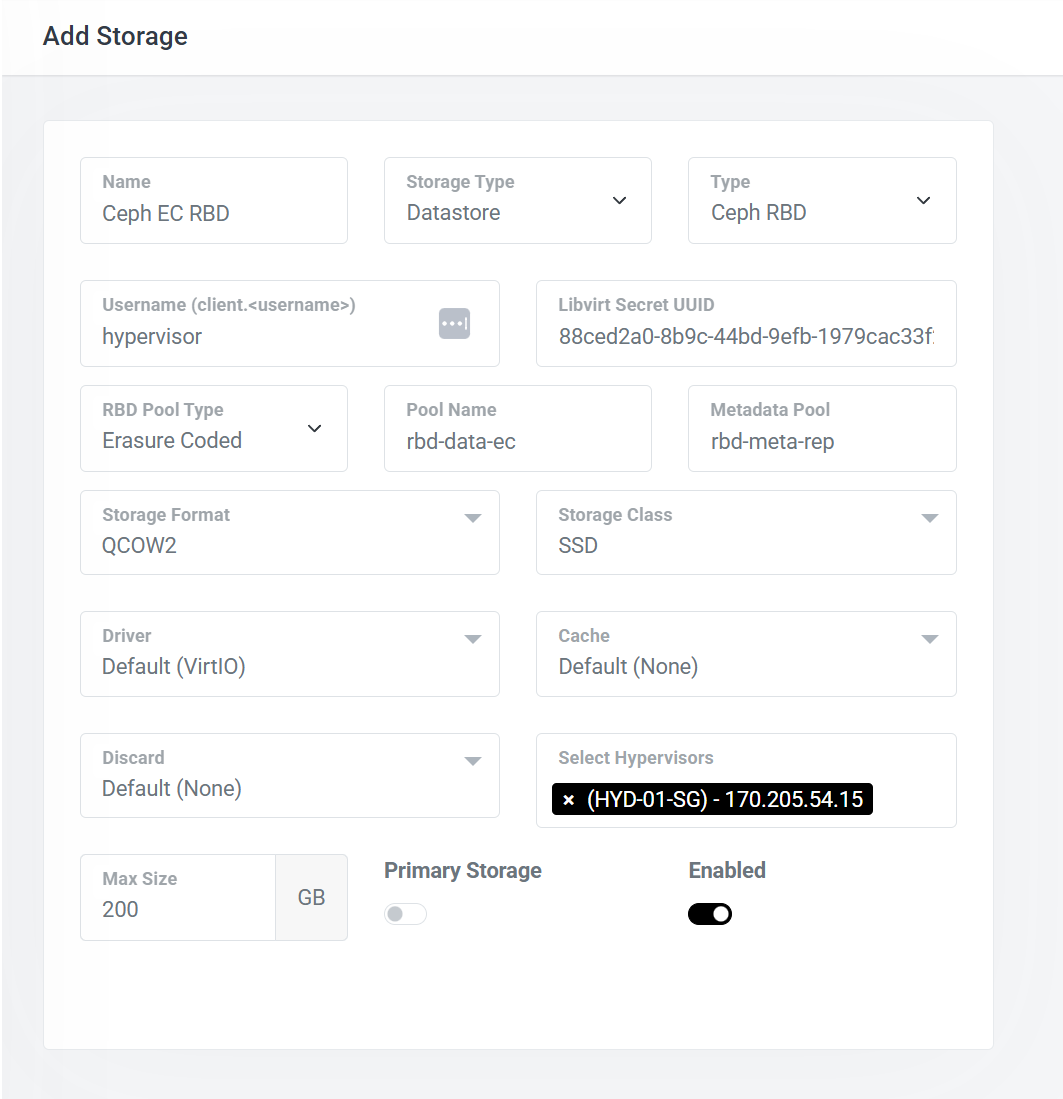

Navigate to Admin Navigation Sidebar > Compute > Storage, then click Add Storage.

Configuration parameters:

- Pool Name: Enter your Ceph pool identifier

- Max Size: Configure the oversubscription limit for your storage pool

- Provisioning: Ceph RBD supports thin provisioning (similar to QCOW2), enabling storage oversubscription

- Cache Method: Set to default (no specific cache method)

- Storage Class: Configure appropriately to enable tiered instance plans

Important configuration notes:

- Thin provisioning allows you to allocate more virtual storage than physical capacity

- The default cache setting is a reasonable starting point for most workloads; change it only if testing shows a benefit for yours

- Storage class configuration enables differentiated service tiers
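
To make the oversubscription idea concrete: with thin provisioning, the sum of all virtual disk sizes can exceed the usable capacity of the pool, and the ratio between the two is what the oversubscription limit constrains. A small arithmetic sketch with made-up numbers; `oversub_percent` is a hypothetical helper and is not tied to how the Management Server computes its limit:

```shell
# Hypothetical helper: oversubscription as a whole-number percentage.
# Usage: oversub_percent <provisioned_gib> <physical_gib>
oversub_percent() {
    echo $(( $1 * 100 / $2 ))
}

# 1500 GiB of virtual disks provisioned on 1000 GiB of usable capacity.
oversub_percent 1500 1000   # prints 150
```

On the Ceph side, `ceph df` reports actual pool usage, and `rbd du -p <POOL_NAME>` lists provisioned versus used space per image.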

Best Practices

- Monitor storage utilization regularly so that an oversubscribed pool does not run out of physical capacity

- Implement proper backup strategies for critical workloads

- Configure appropriate CRUSH rules for data placement

- Test failover scenarios before deploying to production

- Document your Ceph cluster topology and configuration

For additional information on Ceph RBD optimization and advanced configuration, refer to the official Ceph documentation.